saurabhp

March 25, 2019, 12:51

1

The recommended approach is to use HTTrack to take a dump of the static HTML and host it as an archived static website. However, the crawler layout is not very pretty for hosting as a static site. I will be working on improving the layout and adding the necessary data to the static website. You can see the crawler layout, which I will try to improve, at https://meta.discourse.org/?_escaped_fragment_
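For reference, the links in this thread open the crawler layout by appending a bare `_escaped_fragment_` query parameter to a normal page URL. The minimal Python sketch below turns a topic or category URL into that form. The helper name `crawler_view_url` is hypothetical and not part of Discourse; it only does string manipulation with the standard library.

```python
from urllib.parse import urlsplit, urlunsplit

FLAG = "_escaped_fragment_"


def crawler_view_url(url: str) -> str:
    """Return `url` with a bare `_escaped_fragment_` query parameter added.

    Hypothetical helper: it mirrors the URL form used in this thread, e.g.
    https://meta.discourse.org/categories/?_escaped_fragment_
    """
    parts = urlsplit(url)
    # Keep any existing query parameters and add the flag only once,
    # so calling the helper twice gives the same URL.
    params = [p for p in parts.query.split("&") if p]
    if FLAG not in params:
        params.append(FLAG)
    return urlunsplit(
        (parts.scheme, parts.netloc, parts.path, "&".join(params), parts.fragment)
    )


if __name__ == "__main__":
    print(crawler_view_url("https://meta.discourse.org/categories/"))
```

The resulting URL can be opened in a browser, or fetched with `urllib.request.urlopen`, to compare the old and new crawler layouts side by side.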

This is just a placeholder that I will link to from the changes I make, so that anyone reviewing them can get more context.

Let me know if you have any suggestions on this topic.

Thanks

6 Likes

saurabhp

March 29, 2019, 12:33

2

I have created a few pull requests related to this and added screenshots to them:

master ← mrfinch:saurabh/static-topic-layout-1

merged 08:32PM - 27 Mar 19 UTC

…ike live discourse

- also removes post links

- adds "created on", since "last activity" doesn't make much sense on an archived site

I tested the generated HTML at https://search.google.com/structured-data/testing-tool/u/0/ and it detects the same content as before.

This is part of https://meta.discourse.org/t/improving-discourse-static-html-archive/112497; I will be making incremental changes for it instead of creating one big PR.

Adding a screenshot of the new layout. cc @coding-horror, let me know if you have any feedback on it.

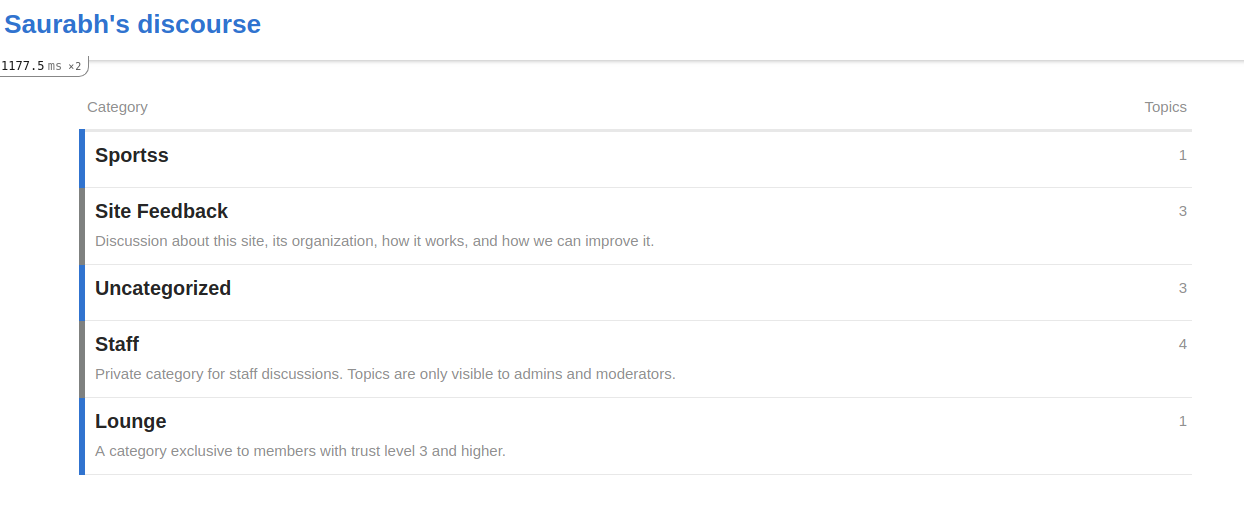

master ← mrfinch:saurabh/static-categories-layout-1

merged 01:57PM - 04 Apr 19 UTC

…escaped_fragment_ page

This is a part of https://meta.discourse.org/t/improving-discourse-static-html-archive/112497

The old layout can be viewed at https://meta.discourse.org/categories/?_escaped_fragment_

I have removed `itemprop='item'` since it was not needed (all information is provided in ListItem already) and was showing up as an error here: https://search.google.com/structured-data/testing-tool/u/0/#url=https%3A%2F%2Fmeta.discourse.org%2Fcategories%2F%3F_escaped_fragment_
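As a quick local check alongside the structured data testing tool, a minimal sketch using Python's standard `html.parser` can count the `itemprop` names in a generated page, for example to confirm that `itemprop='item'` is gone while the other breadcrumb properties remain. The class and function names are hypothetical, and the sample markup is illustrative only; it is not copied from Discourse's crawler templates.

```python
from collections import Counter
from html.parser import HTMLParser


class ItempropCounter(HTMLParser):
    """Counts every itemprop name that appears in an HTML document.

    Self-closing tags such as <meta ... /> are counted too, because the
    default HTMLParser.handle_startendtag calls handle_starttag.
    """

    def __init__(self):
        super().__init__()
        self.counts = Counter()

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs; value is None for bare
        # attributes such as `itemscope`, so those are skipped.
        for name, value in attrs:
            if name == "itemprop" and value:
                # One itemprop attribute may list several space-separated names.
                self.counts.update(value.split())


def count_itemprops(html):
    parser = ItempropCounter()
    parser.feed(html)
    parser.close()
    return parser.counts


# Hypothetical breadcrumb markup, for illustration only.
SAMPLE_HTML = """
<ol itemscope itemtype="http://schema.org/BreadcrumbList">
  <li itemprop="itemListElement" itemscope itemtype="http://schema.org/ListItem">
    <a href="/c/dev" itemprop="item"><span itemprop="name">dev</span></a>
    <meta itemprop="position" content="1" />
  </li>
</ol>
"""

if __name__ == "__main__":
    print(count_itemprops(SAMPLE_HTML))
```

Running it on a page before and after a template change shows which properties were added or dropped; a validator is still needed to judge whether the remaining markup is valid.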

Attaching a screenshot of the new layout.

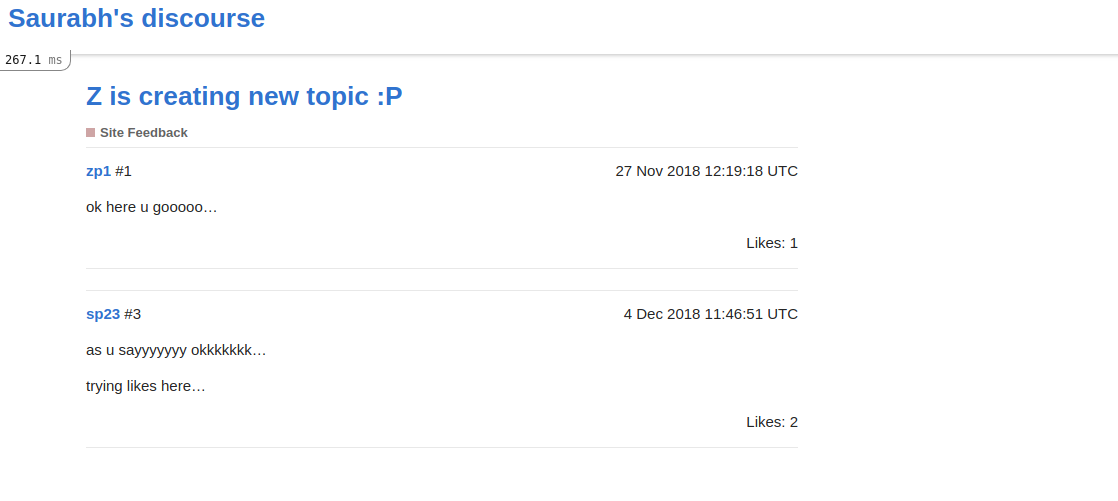

master ← mrfinch:saurabh/static-post-layout-1

merged 09:58AM - 03 Apr 19 UTC

This is a part of https://meta.discourse.org/t/improving-discourse-static-html-archive/112497

Attaching a screenshot of the new layout. I think there should be a better way to display likes; let me know if you have any suggestions.

The old layout can be seen here: https://meta.discourse.org/t/suspect-account-anonymous-account/112813/?_escaped_fragment_

Let me know if you have any suggestions.

5 Likes

tgxworld

April 2, 2019, 5:02

3

Sorry in advance for the question, since I’m not very familiar with HTTrack. Why do we need to use HTTrack to take a dump of the static HTML pages and host that as a static archived website?

5 Likes

saurabhp

April 2, 2019, 6:29

4

Hey,

> Hi all – just jumping in here to say that @mcmcclur’s code was exactly what I was looking for! So thank you very much for sharing. https://github.com/kitsandkats/ArchiveDiscourse is forked from @mcmcclur’s original repo and stored as a Python file instead of a Jupyter notebook.
>
> I’m very happy with how it turned out. Thanks again!

> When possible, we recommend archiving old forums rather than importing them into Discourse. Sometimes this isn’t possible, for various reasons, but getting a fresh start and moving into a new community platform without carrying all your old baggage across is often appealing.
>
> Maybe your community needs a reboot.
>
> But how do you:
>
> Keep the valuable information at old forums around without keeping that ancient forum software running, too, with all its future security vulnerabilities?
>
> A…

HTTrack basically just crawls your website and creates a static HTML dump, which you can then host as a static website.

Quoting from the link above on why people want this:

> I’ve been using Discourse for a couple of years now as a discussion board when teaching my college math classes so, every few months, I retire one or two sites and start one or two more. Obviously, the discussions on the retiring sites have value so I really needed some way to save them.
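To make the crawl-and-dump idea concrete, here is a minimal Python sketch of the core loop a tool like HTTrack performs: start from one page, follow links that stay on the same host, and map each URL to a file path in the dump. It is not how HTTrack is implemented. All names are hypothetical, the page fetcher is passed in as a function (so the sketch can be exercised without network access), query strings are ignored, and links inside pages are not rewritten to local relative paths, which real mirroring tools do.

```python
import posixpath
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin, urlsplit


class LinkExtractor(HTMLParser):
    """Collects the href targets of <a> tags."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value)


def same_site_links(page_url, html):
    """Absolute, fragment-free links on the page that stay on the same host."""
    parser = LinkExtractor()
    parser.feed(html)
    host = urlsplit(page_url).netloc
    links = []
    for href in parser.hrefs:
        absolute, _fragment = urldefrag(urljoin(page_url, href))
        parts = urlsplit(absolute)
        if parts.scheme in ("http", "https") and parts.netloc == host and absolute not in links:
            links.append(absolute)
    return links


def local_path(url):
    """Map a URL to a file path in the dump: /t/foo/1 -> host/t/foo/1/index.html."""
    parts = urlsplit(url)
    path = parts.path or "/"
    if path.endswith("/"):
        path += "index.html"
    elif not posixpath.splitext(path)[1]:
        path += "/index.html"
    return parts.netloc + path


def crawl(start_url, fetch, limit=100):
    """Breadth-first crawl. `fetch(url)` returns HTML or None; returns {path: html}."""
    seen = {start_url}
    queue = deque([start_url])
    pages = {}
    while queue and len(pages) < limit:
        url = queue.popleft()
        html = fetch(url)
        if html is None:
            continue
        pages[local_path(url)] = html
        for link in same_site_links(url, html):
            if link not in seen:
                seen.add(link)
                queue.append(link)
    return pages
```

Writing each entry of the returned dictionary to disk under its path gives a directory that a static web server can host; in practice a dedicated tool handles assets, query strings and link rewriting far more thoroughly.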

Let me know if you have any other questions.

1 Like

You do not “need” to use the HTTrack tool; you can use recursive wget and other similar command-line Linuxy spidering tools as well.
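As an illustration of the recursive wget route, the sketch below builds and optionally runs a GNU wget mirroring command from Python. The flag selection is an assumption on my part rather than something prescribed in this thread, the function names are hypothetical, and `https://forum.example.com/` stands in for your own site.

```python
import shlex
import subprocess


def wget_mirror_command(start_url, output_dir="archive"):
    """Build a GNU wget command that recursively mirrors start_url."""
    return [
        "wget",
        "--mirror",            # recursion + timestamping with infinite depth
        "--convert-links",     # rewrite links afterwards for local/static viewing
        "--adjust-extension",  # save HTML pages with an .html suffix
        "--page-requisites",   # also fetch the CSS, images, etc. pages need
        "--no-parent",         # never ascend above the starting directory
        f"--directory-prefix={output_dir}",
        start_url,
    ]


def run_mirror(start_url, output_dir="archive"):
    """Run the command; raises CalledProcessError if wget exits non-zero."""
    cmd = wget_mirror_command(start_url, output_dir)
    print("running:", shlex.join(cmd))
    return subprocess.run(cmd, check=True)


if __name__ == "__main__":
    print(shlex.join(wget_mirror_command("https://forum.example.com/")))
```

Keeping the command as a list (rather than a shell string) avoids quoting problems when the URL or output directory contains special characters.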

3 Likes

saurabhp

April 7, 2019, 6:33

6

Just an update regarding this.

All 3 pull requests have been merged. I’m adding screenshots of the new static archive look below. Let me know if any of you have suggestions for things to improve.

7 Likes